The future of variable autonomy for uncrewed systems

As published in ON&T December 2020 Edition

Uncrewed systems are used across all domains for applications including security, inspection, repair and maintenance of critical infrastructure, and scientific exploration. These are aimed at reducing the risk to human life and decreasing the cost of operations, but this vision has yet to materialise due to the current levels of infrastructure and personnel required to support missions.

The maritime environment is becoming more complex, with command teams needing to assimilate increasing volumes of data and information from uncrewed systems. Planning and controlling the mission requires the ability to monitor multiple parameters, prioritising and using information from many sources to make decisions.

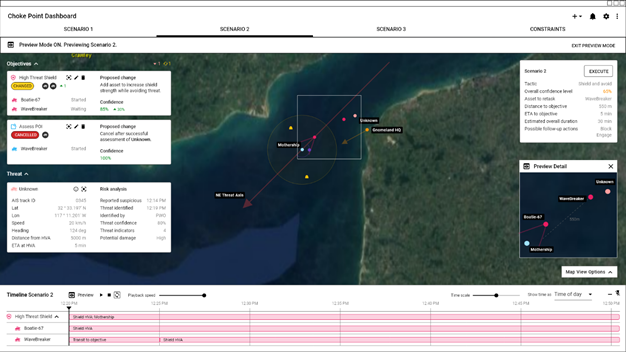

Image: Variable Autonomy will allow A.I. to assist operators in complex mission planning and execution, even when communications degrade

Early vehicles executed pre-programmed missions defined by waypoints. Missions were planned using proprietary software specific to a vehicle, with no autonomy onboard. Over time, the C2 software evolved, building on emerging Common Data Model concepts, allowing a single software suite to plan missions for vehicles from multiple vendors. This reduced the support logistics and operator training burden and, because the software was vehicle agnostic, end customers could confidently procure “best of breed” assets leveraging a “plug and play” approach.

Commercial autonomy architectures have since been developed to run onboard the vehicles, allowing machine learning and A.I. algorithms to adapt to the environment and optimise the mission. The operator can focus on what the mission will do (Goal-Based Mission Planning) while the onboard A.I. determines how the tasks will be done. Collaborative multi-vehicle missions have been demonstrated, where the autonomy installed on multiple vehicles works together to intelligently execute tasks.

The availability of reliable high bandwidth communications in the above-water environment permits greater connectivity between the uncrewed systems and command teams. The operator can remotely pilot a vehicle, stream live sensor data and supervise multiple vehicles simultaneously. Operators therefore have access to increased volumes of information from multiple sources, which can hinder timely decision making and induce fatigue and information overload.

A multi-domain C2 system must be able to plan, monitor and re-plan missions for a large number of assets. This has led to the formalisation of common data models to describe the principal components of the mission. Defined software interface standards also allow a single common C2 system to plan and control many different vehicle types.

The availability of reliable communications above water between the C2 system and assets allows an operator to carry out complex missions without the requirement for advanced Autonomy or A.I. agents. In comparison, the lack of communications underwater has created the need for advanced Autonomy and A.I. onboard the uncrewed vehicles in this domain. This capability has been successfully applied in constrained problem spaces such as Mine Counter Measures (MCM) operations but has yet to be used reliably in more complex scenarios.

Variable Autonomy, where decision making authority can flow seamlessly between the operator and the A.I., will reduce the reliance on high bandwidth persistent communications for complex missions by employing increased levels of A.I. both onboard the assets and within the C2 chain.

Emerging applications, such as offshore inspection, repair, maintenance and asset protection, involve more complexity and variability. They require a step change in the adaptability of the onboard Machine Learning and A.I. algorithms, and a more fluid interaction with the operator(s) for complex decision making. The variable autonomy concept will be essential for these future operations.

The idea blends concepts from both the above-water and sub-surface spaces. A.I. will operate across the different layers of the network, functioning as a smart decision aid when the operator is in control and transitioning to onboard decision making when communications falter. Rapid recommendations will be provided to the operator when the re-planning of multiple assets is required. When communications fail, the uncrewed systems will revert to a more traditional “autonomy” paradigm, with decisions made onboard the assets.
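This hand-over can be pictured as a simple decision rule driven by link quality. The following is a minimal sketch only: the three mode names, the `LinkStatus` fields, the `select_mode` function and the bandwidth and latency thresholds are all hypothetical, chosen for illustration rather than taken from any fielded system.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    """Hypothetical points on the autonomy spectrum."""
    OPERATOR_CONTROL = "operator decides, A.I. acts as a decision aid"
    SUPERVISED = "A.I. proposes re-plans, operator approves when reachable"
    ONBOARD_AUTONOMY = "decisions are made onboard the asset"


@dataclass
class LinkStatus:
    """Assumed summary of the communications link to one asset."""
    connected: bool
    bandwidth_kbps: float
    latency_s: float


def select_mode(link, min_kbps=256.0, max_latency_s=2.0):
    """Pick where decision authority sits for the current link quality.

    The thresholds are illustrative defaults, not recommended values.
    """
    if not link.connected:
        # No link at all: fall back to the traditional autonomy paradigm.
        return Mode.ONBOARD_AUTONOMY
    if link.bandwidth_kbps >= min_kbps and link.latency_s <= max_latency_s:
        # Healthy link: the operator stays in control.
        return Mode.OPERATOR_CONTROL
    # Degraded but usable link: exchange compact recommendations only.
    return Mode.SUPERVISED
```

Calling `select_mode(LinkStatus(connected=False, bandwidth_kbps=0.0, latency_s=0.0))` returns `Mode.ONBOARD_AUTONOMY`. A practical system would presumably add hysteresis so that authority does not oscillate as the link fluctuates around a threshold.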

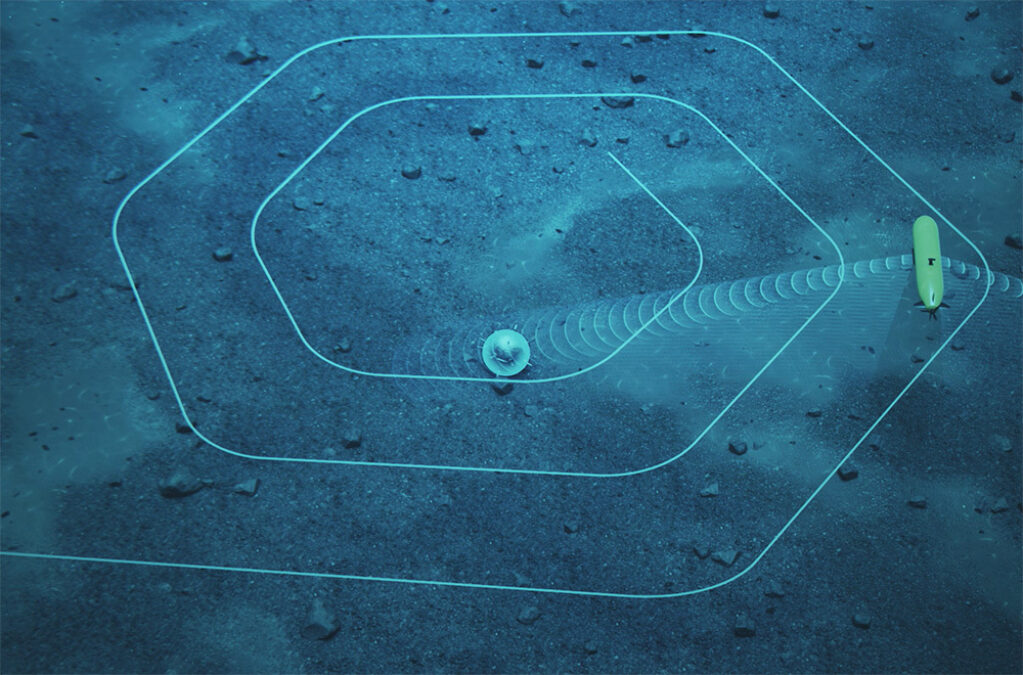

Image: UUV uses A.I. to adapt the mission to inspect a possible man-made target detected by a machine learning algorithm

The variable nature of the environment and threat context creates requirements around prediction so collaboration can continue when communications degrade. Current systems often initiate simple safety behaviours to abort the mission when communications drop, which reduces mission effectiveness but maintains operator trust. Prediction capabilities will allow the operator to maintain trust and situational awareness of the mission as communications fluctuate.

The operator and crewed and uncrewed assets must all maintain a “world model” view of the mission, building a common understanding of the environment. The system must be tolerant to these world models diverging as communications drop. Variable autonomy success also requires the ability to prioritise interactions and data exchanges based on the rules of engagement and communication limitations, and to transfer that data and instructions in an intelligent manner.
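To illustrate what prioritising data exchanges under a communication limit might look like, the sketch below greedily fills a per-transmission byte budget with the most important pending world-model updates. The `Update` type, its priority scale and the `select_updates` function are assumptions made for this example; a real system would derive priorities from the rules of engagement and the mission context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    """Hypothetical world-model update waiting to be transmitted."""
    name: str
    priority: int    # assumed scale: lower number = more important
    size_bytes: int


def select_updates(pending, budget_bytes):
    """Choose which updates to send within one transmission budget.

    Greedy rule: take updates in priority order (smaller first on ties)
    and skip any that no longer fit in the remaining budget.
    """
    chosen = []
    remaining = budget_bytes
    for update in sorted(pending, key=lambda u: (u.priority, u.size_bytes)):
        if update.size_bytes <= remaining:
            chosen.append(update)
            remaining -= update.size_bytes
    return chosen


# Example queue on a low-bandwidth link (all values are illustrative).
pending = [
    Update("sonar_snippet", priority=3, size_bytes=5000),
    Update("vehicle_position", priority=1, size_bytes=100),
    Update("target_contact", priority=2, size_bytes=400),
]
sent = select_updates(pending, budget_bytes=600)
```

With a 600-byte budget the position and contact updates are sent and the large sonar snippet is held back until the link improves. Updates that are not sent are exactly where the world models diverge, which is why the system must tolerate that divergence.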

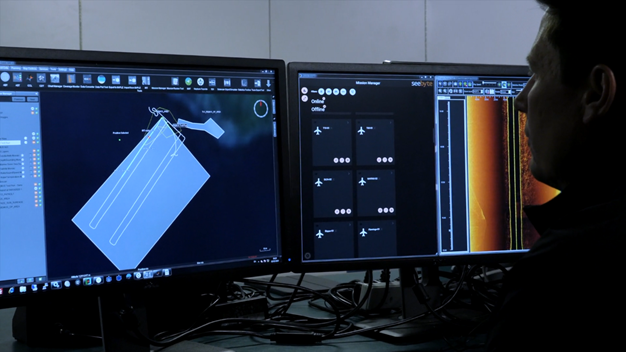

Image: SeeByte’s Data Core and SeeTrack tools maintain a “world model” of the mission to support the operators.

The User Experience (UX) must allow the operator to quickly visualise updates and predictions from many systems, along with recommendations from the A.I. Agent(s). Operators must be able to fluidly interact with the assets to adapt mission plans as necessary. A.I. recommendations must be explainable, and must be accompanied by rapid rehearsals of the recommended solutions to ensure operator trust.

Variable Autonomy will enable a step change in the complexity of missions beyond what fully autonomous systems can currently execute. It will couple the complex decision-making ability of the human operator with the ability of the advanced Autonomy and A.I. Agents to monitor vast volumes of data and rapidly propose, rehearse and present solutions to the changing environment and tactical situation. Machine learning algorithms will provide contextual information for decision making; A.I., operating across the different levels of the network, will propose task assignments for large numbers of assets based on operator input; the onboard Autonomy will provide a safety net, allowing the mission to continue to execute the command intent if communications are degraded.

Effective teaming requires that delegated authority can transition between the operator and A.I. Agents in a trusted and consistent way. It creates the concept of an autonomy spectrum within which the operator and systems operate, with the classical C2 paradigm at one end and full A.I.-enabled autonomy at the other. Allowing the threshold at which decisions are made to vary, and blurring the classic boundaries between human-driven C2 and full A.I. Agency, will produce a more flexible solution suited to the emerging problems for which uncrewed systems are being considered.